Hi, my name is Jacob Wat and I am a rising junior at Northwestern University. I’m majoring in mechanical engineering with a minor in computer science. This summer, I’ve been working with Erik Dahl, a chemical engineer who is conducting experiments on electrospinning and roll-to-roll manufacturing. As a mechanical engineer, my project deals with the design of a new testing apparatus to increase efficiency and usability.

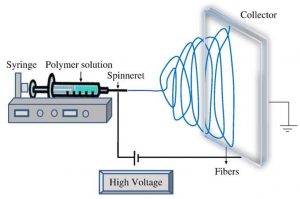

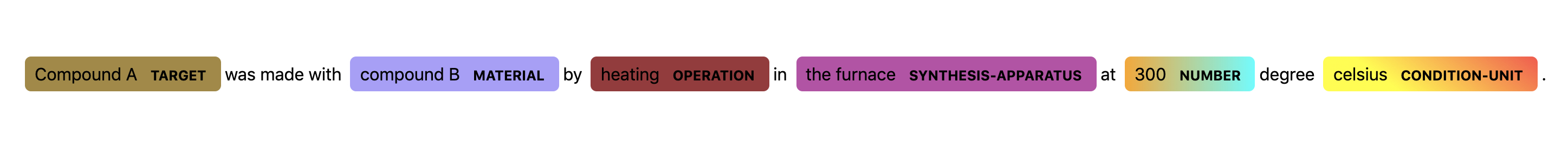

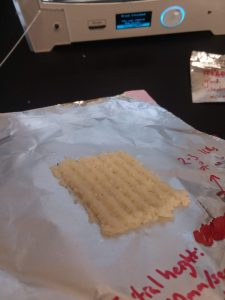

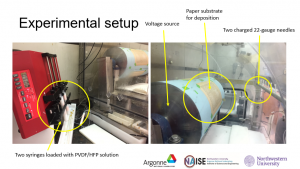

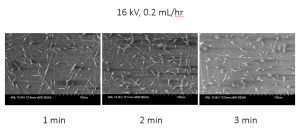

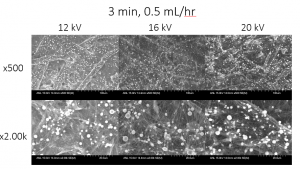

Electrospinning is a method of creating nanofibers from a polymer solution held in syringes, as seen in Figure 1. As the syringe slowly pumps out the solution, a high voltage draws it into nanofibers that deposit on the grounded collector. The collector can change depending on the use: it can be circular or rectangular, a flat surface or a set of wires, etc. At Argonne, we use a rectangular wire collector, which is useful for characterizing the nanofibers. One way of doing that is to take x-rays of the material at the Advanced Photon Source (APS) to determine different attributes of the material.

Figure 1. Basic electrospinning setup.

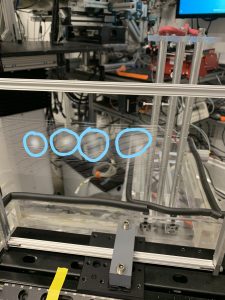

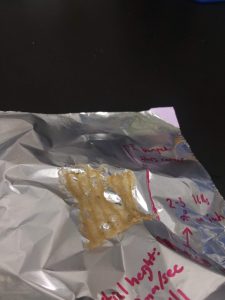

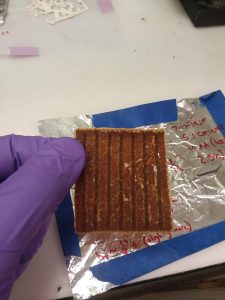

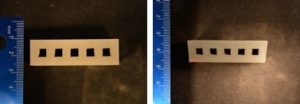

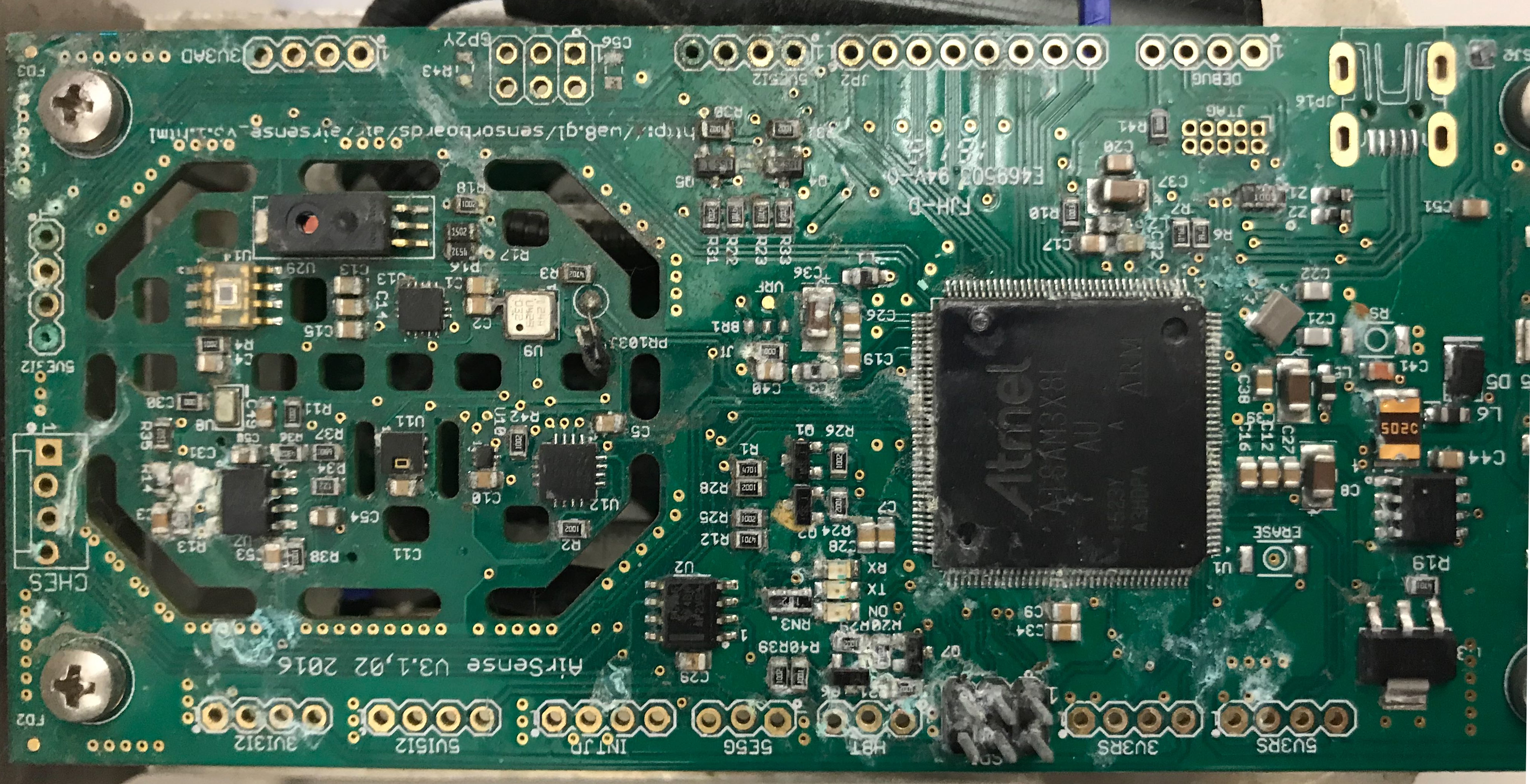

The setup I’m designing for is the one used in the APS electrospinning experiments. In Figure 2, you can see that the current implementation has a removable wire collector. After every iteration, the collector needs to be replaced and the nanomaterial (circled) needs to be cleaned off. All in all, it is a very tedious and inefficient method. To speed up experimentation, we are designing an apparatus that can run continuously once the wires have been initialized in tension.

Figure 2. Picture of wire collector with spun nanofibers present.

With the new electrospinning setup, the most important feature we designed for was continuous lateral movement of the wires, which allows users to rotate the spools to a new section of wire remotely. Accordingly, there would be no need for user oversight; you could start running the experiment, set the motor to slowly replace the used sections of wire, and leave it to run on its own while continuously collecting data. In addition, many other features had to be redesigned to accommodate this new setup. For example, one of the key parts of electrospinning is the grounding of the collectors (in this case, the wires). To accomplish this, we used grounding bars with notches cut in them to control the spacing between the wires. This solves the problems of grounding and wire spacing with one element of the design. Another important consideration is maintaining tension in the wires. This is achieved via a spring/shock-absorber-type part that pushes against one of the grounding bars so that each wire always remains in tension. However, each wire has to be individually tensioned before the experiment can begin.
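To give a rough feel for the remote wire advance described above, here is a minimal sketch of the geometry involved: how far a spool has to turn to pull a fresh section of wire into place. Everything in it is an assumption made for illustration. The function names, the spool diameter, the use of a stepper motor, and the 200 steps per revolution are all hypothetical and are not taken from the actual design. It also ignores the change in effective spool diameter as wire builds up.

```python
import math


def spool_rotation_deg(advance_mm: float, spool_diameter_mm: float) -> float:
    """Angle in degrees a spool must turn to advance the wire by advance_mm.

    Uses arc length = radius * angle, treating the spool diameter as constant
    (an assumption; wound wire would slowly change the effective diameter).
    """
    if advance_mm < 0 or spool_diameter_mm <= 0:
        raise ValueError("advance must be >= 0 and diameter must be > 0")
    radius_mm = spool_diameter_mm / 2
    return math.degrees(advance_mm / radius_mm)


def motor_steps(advance_mm: float, spool_diameter_mm: float,
                steps_per_rev: int = 200) -> int:
    """Whole motor steps for the same advance, for a hypothetical stepper."""
    angle_deg = spool_rotation_deg(advance_mm, spool_diameter_mm)
    return round(angle_deg / 360 * steps_per_rev)


if __name__ == "__main__":
    # Hypothetical numbers: advance 50 mm of wire on a 20 mm diameter spool.
    print(spool_rotation_deg(50, 20))
    print(motor_steps(50, 20))
```

In a real setup the advance length would be chosen to cover the section of wire exposed to the spinning, and the step count would depend on the motor and any gearing actually used.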

For future development, a prototype should be made and tested at the APS because there are very specific space requirements that have not been dealt with yet. Once it can replace the current implementation, the next step will be to improve the user experience. This could include introducing a method for tensioning all wires at the same time instead of individually, or even cleaning used sections of wire so they can be reused.

References:

Urbanek, Olga. “Electrospinning.” Laboratory of Polymers & Biomaterials, http://polybiolab.ippt.pan.pl/18-few-words-about/17-electrospinning.

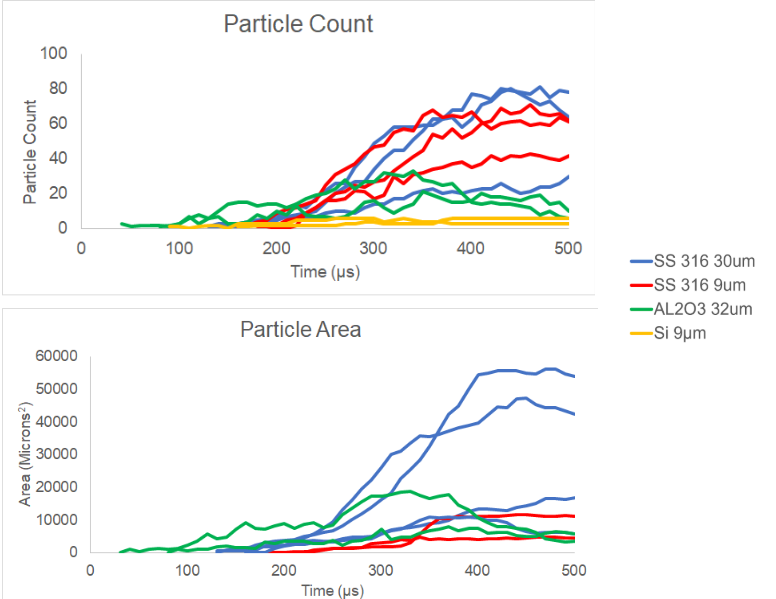

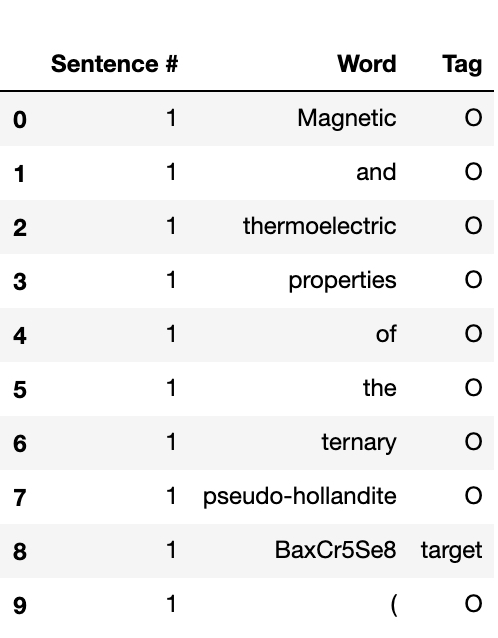

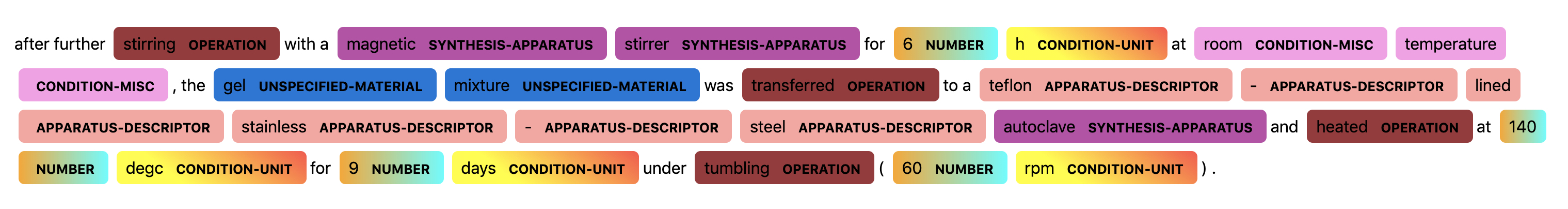

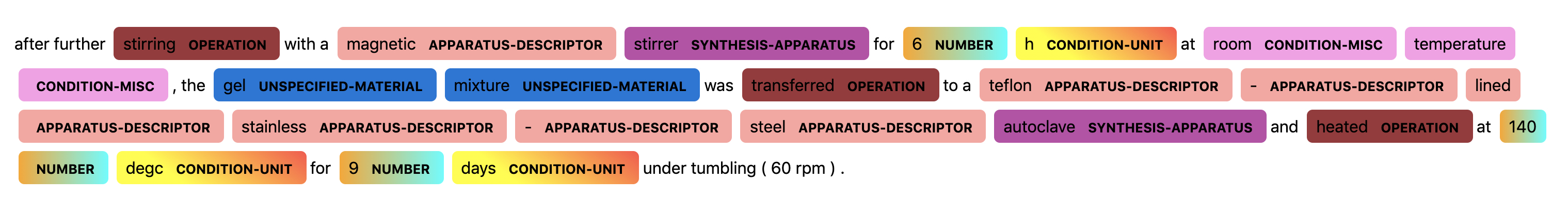

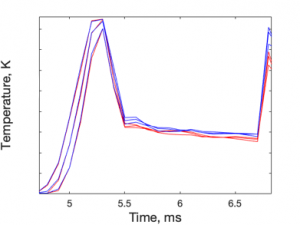

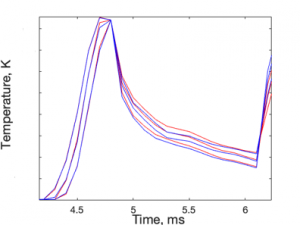
