Proton beams are increasingly being used to treat cancer with greater precision. To plan a proton treatment, clinicians typically image the tumor site with X-ray computed tomography (X-ray CT). This process passes X-ray photons through the target from many angles, measures how strongly they are attenuated along each path, and then uses reconstruction methods to build a 3D image of the target.

A new imaging method, which uses protons instead of photons, promises more accurate images for proton treatment planning while exposing the patient to a lower radiation dose, because it measures how tissue slows protons directly instead of estimating it from X-ray attenuation. Proton computed tomography (pCT) uses billions of protons and several computationally intensive processing steps to reconstruct a 3D image of the tumor site. Achieving the required accuracy on a single computer would take a long time. It is not clinically feasible to ask a patient to stay still for a long period while being imaged, or to wait a day for the images to be produced.
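The article does not say which reconstruction algorithm the team uses. As a generic illustration only, the sketch below uses the Kaczmarz method, also known as the algebraic reconstruction technique (ART). It is a standard iterative approach in which each measured path contributes one linear equation relating the unknown voxel values to the measurement. The sketch assumes straight paths with idealized unit weights through a 2x2 image. Real pCT proton paths are curved, and the systems involve far more voxels and equations. The function `kaczmarz` and all of the data are hypothetical.

```python
# Toy sketch of iterative algebraic reconstruction (Kaczmarz / ART).
# Assumption: each path is one linear equation a_i . x = b_i, where a_i holds
# the (idealized, unit) weight of each voxel along the path and b_i is the
# measured quantity integrated along that path.

def kaczmarz(rows, b, n_voxels, sweeps=100, relax=1.0):
    """Solve rows @ x = b by cyclically projecting x onto each equation.

    relax is the relaxation factor; values in (0, 2) converge for
    consistent systems.
    """
    x = [0.0] * n_voxels
    for _ in range(sweeps):
        for a_i, b_i in zip(rows, b):
            norm_sq = sum(a * a for a in a_i)
            if norm_sq == 0.0:
                continue  # a path that misses every voxel carries no information
            residual = b_i - sum(a * xj for a, xj in zip(a_i, x))
            step = relax * residual / norm_sq
            x = [xj + step * a for xj, a in zip(x, a_i)]
    return x


# A 2x2 "image" flattened row by row: [top-left, top-right, bottom-left, bottom-right].
true_image = [1.0, 2.0, 3.0, 4.0]

# Hypothetical straight-line paths: two rows, two columns, two diagonals.
paths = [
    [1, 1, 0, 0],  # top row
    [0, 0, 1, 1],  # bottom row
    [1, 0, 1, 0],  # left column
    [0, 1, 0, 1],  # right column
    [1, 0, 0, 1],  # main diagonal
    [0, 1, 1, 0],  # anti-diagonal
]

# Simulated measurements: the sum of the image values along each path.
measurements = [sum(a * v for a, v in zip(p, true_image)) for p in paths]

reconstruction = kaczmarz(paths, measurements, n_voxels=4)
print([round(v, 3) for v in reconstruction])
```

Each update in this sweep depends on the previous one, so the loop is inherently sequential. Parallel variants instead compute updates for many paths at once and combine them, which is one way such reconstructions can be spread across GPUs.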

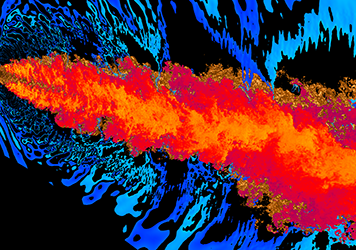

A group of computer scientists at Argonne and Northern Illinois University (NIU) has been working to accelerate pCT imaging using parallel and heterogeneous high-performance computing techniques. So far, the team has developed the code and tested it on GPU clusters at NIU and Argonne, producing highly accurate 3D reconstructions from proton CT data in less than ten minutes.

Image credit: Fermilab