Hello! My name is Zachary Martin, and I am an undergraduate Materials Science & Engineering student at Northwestern. This summer I am working with Dileep Singh in the field of additive manufacturing (AM), and my project focuses on minimizing/controlling dimensional distortions created during the sintering process of binder jet printing, a promising powder printing technique.

Binder jet printing can fabricate entire complex parts by repeatedly infiltrating layers of loose powder with a liquid binder. The binder holds the targeted region of powder together until postprocessing, forming a “green part” that is later densified and bonded through sintering.

What sets binder jet printing apart from the many other AM technologies is that this first step of creating a “green part” involves only small temperature variations. Other techniques, including the heavily researched Selective Laser Melting (SLM), form final parts in the printing bed through the use of large inputs of energy to rapidly melt and bond each layer of powder together, which introduces a large temperature gradient across the surface of the part. These gradients lead to the development of internal stresses within the component, lowering the performance of the final part.

Binder jetting avoids these problems by creating a green part at a relatively constant temperature and then heating the entire component at once during sintering, which minimizes temperature gradients within the sample. Specifically, the sintering step uses high temperatures to first remove the binder material and then densify the powder into a final part. Because the whole part densifies at once, sintering promotes three-dimensional bonding, eliminating issues of anisotropy and yielding parts with bonding structures closer to those found in traditional manufacturing feedstock.

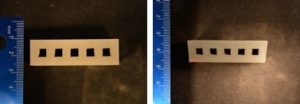

Although sintering can significantly increase the mechanical performance of final parts, its conditions must be carefully controlled to promote densification while minimizing creep and uneven shrinkage at high temperatures. The process requires parts to be held at high temperatures for an extended period of time, which allows notable creep to develop in final parts; reducing this creep is the goal of my project. Additionally, temperature gradients within the furnace can lead to unequal rates of shrinkage across a part, causing dimensional warpage. These dimensional distortions can be seen in final samples, as demonstrated by the angled outside edges and disrupted channels in Figure 1.

Figure 1: Dimensional changes to channeled green part before (left) and after (right) sintering

In previous studies, warpage of the final part has been counteracted by infiltrating parts with ceramics or other metals. One group reduced distortions by introducing ceramic nanoparticles to fill voids present in a steel powder crystal structure, which greatly inhibited creep during sintering. Another group introduced additional metals with a lower melting temperature than the powder feedstock, which showed a similar reduction in creep. These solutions, however, alter the performance and properties of final parts, as the structure is no longer purely steel.

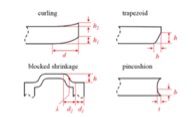

My research focuses on altering the temperature and time conditions of the sintering process to quantify their effects on the overall warpage of a final part. Additionally, by changing sample orientation during sintering, I can identify trends that minimize the effect of gravity and temperature gradients on a part. To systematically quantify the warpage present, I use a combination of ASTM dimensional guidelines and distortion-measuring methods described in previous additive manufacturing papers. This allows samples to be compared empirically with respect to warpage in each dimension. The guidelines I use are visualized in Figure 2.

Figure 2: Classification of warpage and dimensions measured in a final part
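
As a rough illustration of what a per-dimension comparison can look like, the sketch below computes percent linear shrinkage along each axis from green and sintered measurements, plus the spread between axes as a simple indicator of uneven shrinkage. This is not the ASTM procedure or the exact method used in this project; the `Dimensions` record, the function names, and the sample values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dimensions:
    """Overall part dimensions in millimetres (hypothetical measurement record)."""
    x: float
    y: float
    z: float


def linear_shrinkage_percent(green: Dimensions, sintered: Dimensions) -> dict[str, float]:
    """Percent linear shrinkage along each axis: 100 * (green - sintered) / green."""
    return {
        axis: 100.0 * (getattr(green, axis) - getattr(sintered, axis)) / getattr(green, axis)
        for axis in ("x", "y", "z")
    }


def shrinkage_spread(shrinkage: dict[str, float]) -> float:
    """Largest minus smallest axis shrinkage; 0 means perfectly uniform shrinkage."""
    return max(shrinkage.values()) - min(shrinkage.values())


if __name__ == "__main__":
    # Made-up measurements for illustration only.
    green = Dimensions(x=20.0, y=20.0, z=10.0)
    sintered = Dimensions(x=17.0, y=17.0, z=8.0)

    shrinkage = linear_shrinkage_percent(green, sintered)
    for axis, value in shrinkage.items():
        print(f"{axis}: {value:.1f}% shrinkage")
    print(f"spread: {shrinkage_spread(shrinkage):.1f} percentage points")
```

Expressing shrinkage as a percentage of the green dimension makes samples of different sizes comparable, and the spread gives a single number to track across sintering temperatures, hold times, and orientations.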

Going forward, the group will move on to the creation of channeled parts using a ceramic material, which must be created using binder jet printing since the high temperatures required to bond the powder cannot be achieved using SLM or other laser-based techniques. The results from my research will reveal optimal processing conditions to minimize creep and temperature gradients during the sintering process, and will provide a basis on which to quantify the results of the final ceramic part.

References

[1] S. Allen, E. Sachs, “Three-Dimensional Printing of Metal Parts for Tooling and Other Applications”, METALS AND MATERIALS, vol. 6, no. 6, pp. 589-594, 2000.

[2] L. Grant, M. Alameen, J. Carazzone, C. Higgs, Z. Cordero, “Mitigating Distortion During Sintering of Binder Jet Printed Ceramics”, Solid Freeform Fabrication Symposium, 2018.
