Hello there! My name is Lyon Zhang, and I am a rising junior studying Computer Science at Northwestern. This summer I am working with Jakob Elias on a variety of projects with the broad goal of using networking and artificial intelligence techniques to automate visual analysis of various manufacturing methods. The most extensive and ambitious of these projects focuses on Laser Powder Bed Fusion, a 3D printing technique that uses a thin layer of metallic powder.

As a short summary, Laser Powder Bed Fusion (LPBF) is an additive manufacturing technique that uses a laser beam to melt a thin layer of metallic powder through to the base below. Like other 3D printing techniques, this method is tremendously useful because it enables the automated production of geometrically complex and minuscule parts. However, the high energy of the laser, the scattered nature of the powder bed, and the dynamic heating and cooling patterns result in a chaotic process that easily forms defects in defect-sensitive components. For a more detailed overview of LPBF, see Erkin Oto’s post below.

The current method of analyzing defects (specifically, keyhole porosity as explained by Erkin) is simple manual inspection of X-ray images. Once deformities have been spotted, a researcher must personally sync the X-ray frame with its corresponding infrared data. Like any analytical process that involves human judgment, this method is time-consuming and somewhat prone to errors, even for the best researchers.

Thus, the first step was to create a tool that could assist with immediate research needs, by providing fast, locally stored data, synced images with informational charts, and dynamic control over the area of interest:

Figure 1: Demo interface with fast data pulling and pixel-precise control

One flaw with the above interface is that the infrared values do not inherently contain accurate temperature values, and thus these must be computed manually. In the interface, we are using a pre-set scale that is not necessarily accurate, but is still useful for visualization. However, for precise research on LPBF, exact temperature data is needed to gauge the physical properties of the materials used.

Once again, the process of calibrating exact temperature values is currently done by visual identification of the melt pool in X-ray images combined with knowledge of material melting point. This method is thus entirely subject to the researcher’s intuition:

Figure 2: X-ray video of powder bed during fusion. The chaotic heating, cooling, and pressure differentials cause distortion and volatile powder particles.

The disorderly nature of the LPBF process lends itself to a consistency problem: one researcher may have different opinions from another on the correct location of the melt pool in any given experiment. Even the same researcher’s intuition likely varies slightly from day to day. To this end, any sort of automation of this visual identification problem would immediately provide the benefit of consistency from experiment to experiment.

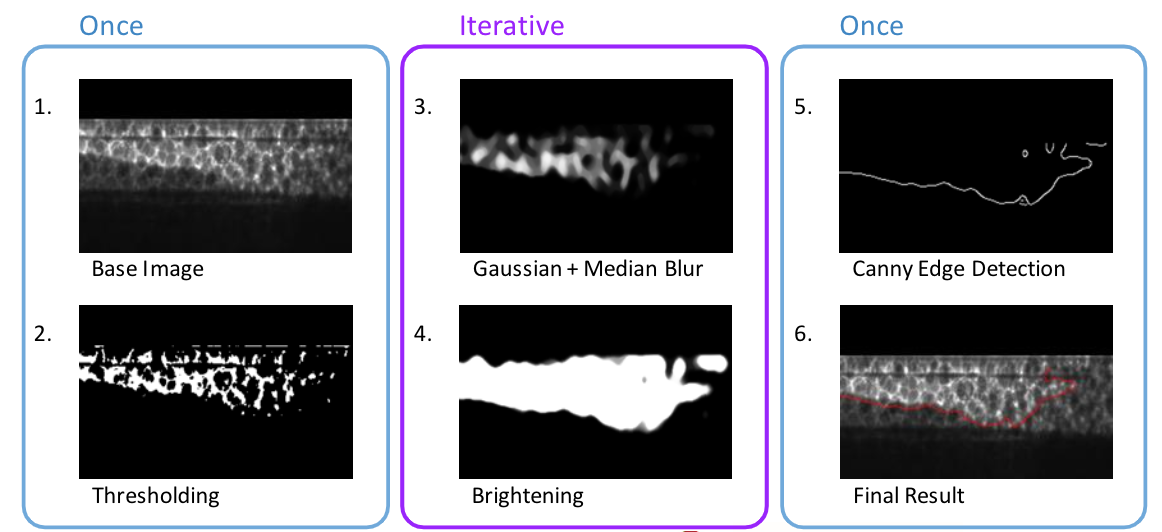

The automated visual identification of these image sets takes advantage of the different textures and brightness levels of each region, and combines these with assumptions about the location of the melt pool relative to said regions. An experimentally discovered sequence of brightness thresholding, Gaussian blurring, median blurring, brightening, and Canny edge detection culminates in semi-accurate region detection for individual images:

Figure 3: Process of region detection for individual images.

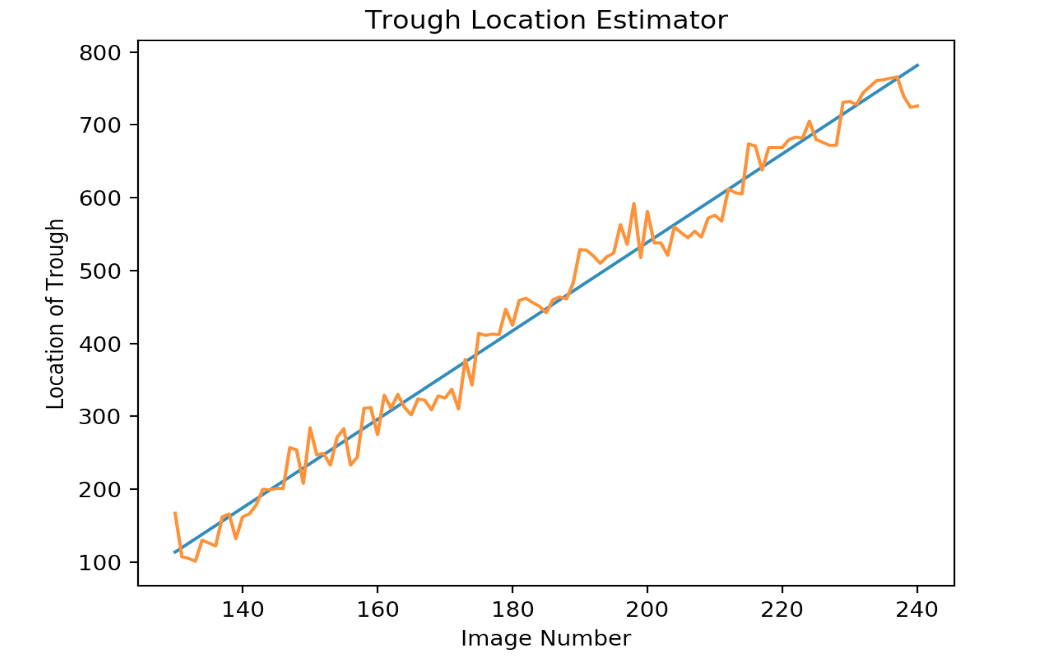

This process is quick (approx. 1.5 seconds for all ~100 images) and looks accurate at first glance. However, putting all the processed images together in sequence reveals that the detected bottom of the melt pool actually jumps around quite chaotically from frame to frame. Fortunately, this has a relatively simple solution, which takes advantage of the high image count to generate a smoothed path using least-squares linear regression (the line that minimizes the mean squared error). With ~100 images contributing, this estimate (with a researcher-supplied offset) is very likely to closely track the true path of the laser.

Figure 4: (Top) Detected location of the melt pool bottom (red) and smoothed estimate of the laser path (blue). (Bottom) Pixel values of the detected bottom location and the least-squares line, whose slope gives the rate of movement.
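The smoothing step can be sketched as an ordinary least-squares line fit over the per-frame detections. This is a minimal sketch rather than the project's actual script: the function name `fit_laser_path`, the `offset_px` parameter, and the synthetic data are assumptions made for illustration.

```python
import numpy as np


def fit_laser_path(detected_px, offset_px=0.0):
    """Fit a least-squares line to per-frame melt pool positions.

    detected_px: noisy detected position (in pixels) for each frame, in order.
    offset_px:   hypothetical researcher-supplied correction added to the fit.
    Returns (rate in pixels per frame, smoothed position for each frame).
    """
    positions = np.asarray(detected_px, dtype=float)
    frames = np.arange(positions.size, dtype=float)
    # A degree-1 polyfit minimizes the squared error between line and detections.
    rate, intercept = np.polyfit(frames, positions, deg=1)
    smoothed = rate * frames + intercept + offset_px
    return rate, smoothed


if __name__ == "__main__":
    # Synthetic example: steady motion of 3 px/frame plus alternating jitter.
    frames = np.arange(100)
    jitter = np.where(frames % 2 == 0, 2.0, -2.0)
    detected = 3.0 * frames + 10.0 + jitter
    rate, smoothed = fit_laser_path(detected, offset_px=5.0)
    print(f"estimated rate: {rate:.3f} px/frame")
```

Because the fit averages over every frame, frame-to-frame jitter in the individual detections has little effect on the estimated rate.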

From there, it’s a relatively simple process to match the points demarcating the melt pool on the X-ray image to the corresponding points on the IR images, using the known geometry of the images:

Figure 5: Melt pool bounds on X-ray image (right) and corresponding area on the IR images (left).
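The exact geometry is not spelled out here, so the following is only a sketch of what such a mapping might look like in the simplest case. It assumes that both cameras share an axis along the scan direction, that each has a known pixel size, and that one reference point is visible in both images, with no rotation or perspective correction. `ImageGeometry`, `xray_to_ir_column`, and all numeric values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageGeometry:
    """Hypothetical description of one camera's view along the scan direction."""
    um_per_px: float  # physical size of one pixel, in micrometres
    origin_px: float  # pixel column of a reference point seen by both cameras


def xray_to_ir_column(x_xray: float, xray: ImageGeometry, ir: ImageGeometry) -> float:
    """Map a pixel column on the X-ray image to the matching IR pixel column."""
    # Convert to a physical distance from the shared reference point...
    distance_um = (x_xray - xray.origin_px) * xray.um_per_px
    # ...then express that distance in IR pixels.
    return ir.origin_px + distance_um / ir.um_per_px


if __name__ == "__main__":
    xray = ImageGeometry(um_per_px=2.0, origin_px=100.0)  # made-up values
    ir = ImageGeometry(um_per_px=20.0, origin_px=16.0)    # made-up values
    left, right = 150.0, 250.0  # melt pool bounds on the X-ray image
    print(xray_to_ir_column(left, xray, ir), xray_to_ir_column(right, xray, ir))
```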

While already useful in providing consistency, speed, and accuracy in melt pool detection for LPBF, the process still contains steps that require manual input and should be automated in the future. For example, the existing sequence of image processing techniques used to detect the melt pool was developed iteratively, simply by entering successive combinations into the processing script. Many experiments are conducted with the same X-ray camera settings and thus should use the same image processing techniques. If a researcher could label the correct laser positions on just a few image sets, a machine learning model could then search for the best combination to use on many subsequent experiments.

Another crucial issue is that although many experiments are run with the same camera settings, not all are. Thus, for a different image set, the optimal image processing parameters might need to be re-tuned, which takes a non-trivial amount of time. Another potential avenue for future development would be to create a classifier that could determine the image type based on image features, and then select the correct set of processing parameters as determined using the method above.

These two further developments alone could turn this project into a useful tool for the visual analysis of all LPBF experiments, not limited by incidental factors such as researcher bias and X-ray imaging settings. Once integrated with other LPBF analysis research performed this summer, this computer vision project has the potential to help form the basis for a powerful tool in LPBF defect detection and control.

References:

- Zhao C. et al. “Real-time monitoring of laser powder bed fusion process using high-speed X-ray imaging and diffraction.” Nature Research Journal, vol. 7, no. 3602, 15 June 2017

- Jinbo Wu, Zhouping Yin, and Youlun Xiong “The Fast Multilevel Fuzzy Edge Detection of Blurry Images.” IEEE Signal Processing Letters, Vol. 14, No. 5, May 2007
