Digitized museum collections are the next ‘big data’ dataset

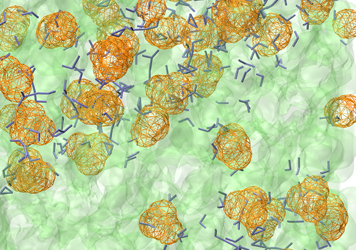

The Field Museum of Natural History in Chicago holds a massive pinned insect collection of roughly 4.5 million specimens, dating back at least a century and contributed by entomologists and private collectors from all over the world, including scientists at the Field. The collection currently occupies over ten thousand drawers of new high-density storage that can be opened as needed for study.

The collection, which continues to grow, represents a wealth of data for studies of taxonomy, biodiversity, invasive species and more, as well as a significant public investment in research and applied environmental science. Digitizing the collection would not only preserve it but also make it accessible to researchers who cannot travel to the Field. However, no system yet exists for digitizing collections of objects this large, which makes large-scale analysis of even subsets of such collections extremely difficult.

Argonne scientists Mark Hereld and Nicola Ferrier are collaborating with Field Museum associate curator Petra Sierwald to devise an advanced, software-based pipeline for high-throughput digitization of the collection. The bar is high: to digitize a collection this big in one year of 24/7 operation, the average time available to capture a single specimen is about 7 seconds. Another challenge is deciphering the labels beneath each pinned specimen, which are closely packed, often partially obscured, and in many cases handwritten. Moreover, the specimens are fragile and can't be manipulated.
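The 7-second figure follows from dividing one year of nonstop operation by the size of the collection. A quick check, assuming a 365-day year with no downtime and the approximate count of 4.5 million specimens:

```python
# Average time budget per specimen if the whole collection is imaged in one year.
SPECIMENS = 4_500_000               # approximate collection size cited above
SECONDS_PER_YEAR = 365 * 24 * 3600  # 24/7 operation; ignores leap days and downtime

seconds_per_specimen = SECONDS_PER_YEAR / SPECIMENS
print(f"{seconds_per_specimen:.3f} s per specimen")  # 7.008 s per specimen
```

Any real downtime for maintenance, drawer changes, or re-imaging would shrink this budget further.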

This summer, two students working with Hereld and Ferrier experimented with image-capture techniques that accommodate the different viewing angles needed to quickly sample everything on the labels and extract information from the target label. The image pre-processing methods the team eventually develops will enable automatic reconstruction of label data. The team is also developing methods to identify and track the drawers, unit boxes, and individual specimens as they move through the digitization pipeline.
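The article does not say how this tracking is implemented. As one hypothetical illustration, each specimen could carry a key derived from its physical location (drawer, then unit box, then position in the box), and a log could record which pipeline stages each key has passed. The names `SpecimenKey`, `PipelineLog`, and the stage names are invented for this sketch.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SpecimenKey:
    """Hypothetical hierarchical ID: drawer -> unit box -> specimen."""
    drawer: int
    unit_box: int
    specimen: int

    def label(self) -> str:
        # Zero-padded so string labels sort in physical storage order.
        return f"D{self.drawer:05d}-U{self.unit_box:03d}-S{self.specimen:04d}"


@dataclass
class PipelineLog:
    """Records which (hypothetical) pipeline stages each specimen has completed."""
    stages: dict = field(default_factory=dict)

    def record(self, key: SpecimenKey, stage: str) -> None:
        self.stages.setdefault(key, []).append(stage)

    def pending(self, required: list[str]) -> list[SpecimenKey]:
        # Specimens missing at least one required stage, in storage order.
        missing = [k for k, done in self.stages.items() if not set(required) <= set(done)]
        return sorted(missing, key=lambda k: (k.drawer, k.unit_box, k.specimen))


log = PipelineLog()
a, b = SpecimenKey(12, 3, 7), SpecimenKey(12, 3, 8)
log.record(a, "imaged")
log.record(a, "labels_read")
log.record(b, "imaged")
print(a.label())                                # D00012-U003-S0007
print(log.pending(["imaged", "labels_read"]))   # only b is still pending
```

Keying on physical location keeps the log aligned with how the collection is actually stored, so a gap in the pipeline points straight to a drawer and box.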

This is a difficult problem. How reliably a given approach finds the correct solution depends on lighting, geometry, and the details of the object being measured. The team is exploring a range of image analysis and 3D capture techniques, expecting the best solution to be a combination of existing and new algorithms.
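One generic way to combine methods whose reliability varies with conditions is confidence-weighted voting. This is not the team's stated method, only a sketch under assumptions: each hypothetical "reader" returns a candidate label text with a confidence in [0, 1] (or `None` when it fails), agreeing readers pool their confidence, and the result is rejected if no candidate clears a threshold.

```python
from collections import defaultdict
from typing import Callable, Optional

# Hypothetical interface: a reader maps an image to (text, confidence) or None.
Reader = Callable[[object], Optional[tuple[str, float]]]


def combine_readings(image: object, readers: list[Reader],
                     min_score: float = 0.5) -> Optional[str]:
    """Return the candidate text with the highest pooled confidence, or None."""
    scores: dict[str, float] = defaultdict(float)
    for read in readers:
        result = read(image)
        if result is None:
            continue  # this method failed under these conditions
        text, confidence = result
        scores[text.strip()] += confidence  # agreeing methods reinforce each other
    if not scores:
        return None
    best_text, best_score = max(scores.items(), key=lambda kv: kv[1])
    return best_text if best_score >= min_score else None


# Stub readers with made-up outputs, standing in for real methods.
r1 = lambda img: ("label text A", 0.6)
r2 = lambda img: ("label text B", 0.4)
r3 = lambda img: ("label text A", 0.3)
print(combine_readings(None, [r1, r2, r3]))  # label text A
```

Pooling rewards agreement between independent methods, while the threshold lets the pipeline flag a specimen for manual review instead of storing a low-confidence guess.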

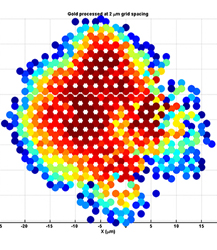

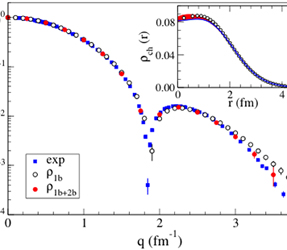

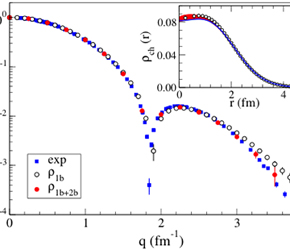

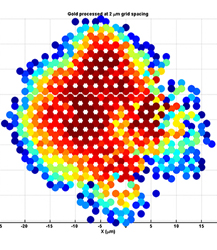

Experimentalists from all over the world visit Argonne each year to use the ultra-bright X-ray photon beams produced here to peer inside materials. Research teams with beamline reservations, or ‘beamtime,’ are expected to set up, calibrate the detector, and run their experiments round-the-clock for days at a stretch. Any problem with the experimental setup surfaces only during the analysis phase, often rendering the entire dataset useless. Even something as minor as a bad cable can leave terabytes of data unusable. That’s a problem.